The Tama System

At its heart, Tama is a system for exploring conversational interaction.

The design process began by examining how people communicate with and around a gaze-enhanced speech agent. Tama was then developed through iterative cycles of frequent tests, workshops, user feedback, and evaluations, with the hardware and software updated continuously to refine the interaction design. The design went through four phases, each tested with individual users and groups across various contexts and settings.

In 2018, we started the design of Tama, reimagining the smart speaker as an active participant in conversations with the engaging presence of a human partner. Inspired by recent research, Tama was conceived to go beyond traditional voice-activated assistants through the use of mutual gaze. The approach is rooted in the insight that a natural exchange of glances can offer a more intuitive way to address a device than a conventional wake word, and it aims to address the challenge of overlapping speech in lively home environments. Building on advances in gaze detection, Tama was designed to follow and take part in conversations, so that interaction feels as natural and effortless as chatting with a friend. Our vision was a gaze-augmented speech agent that makes communication both more accurate and easier. Tama reflects our commitment to creating more human-centric technology.

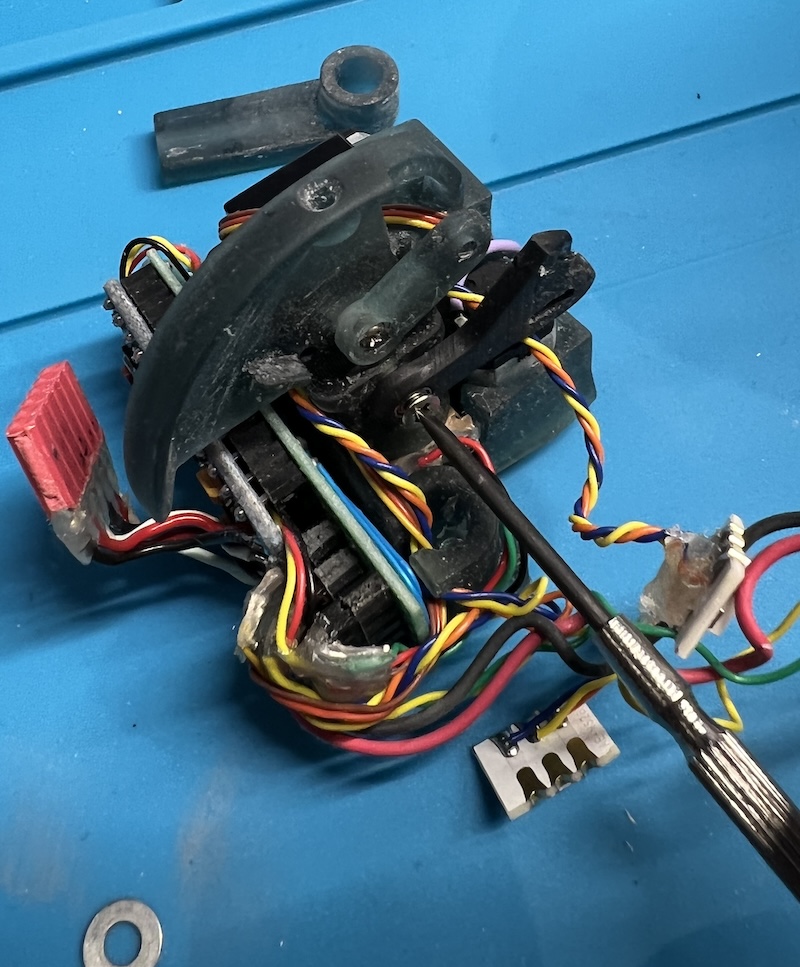

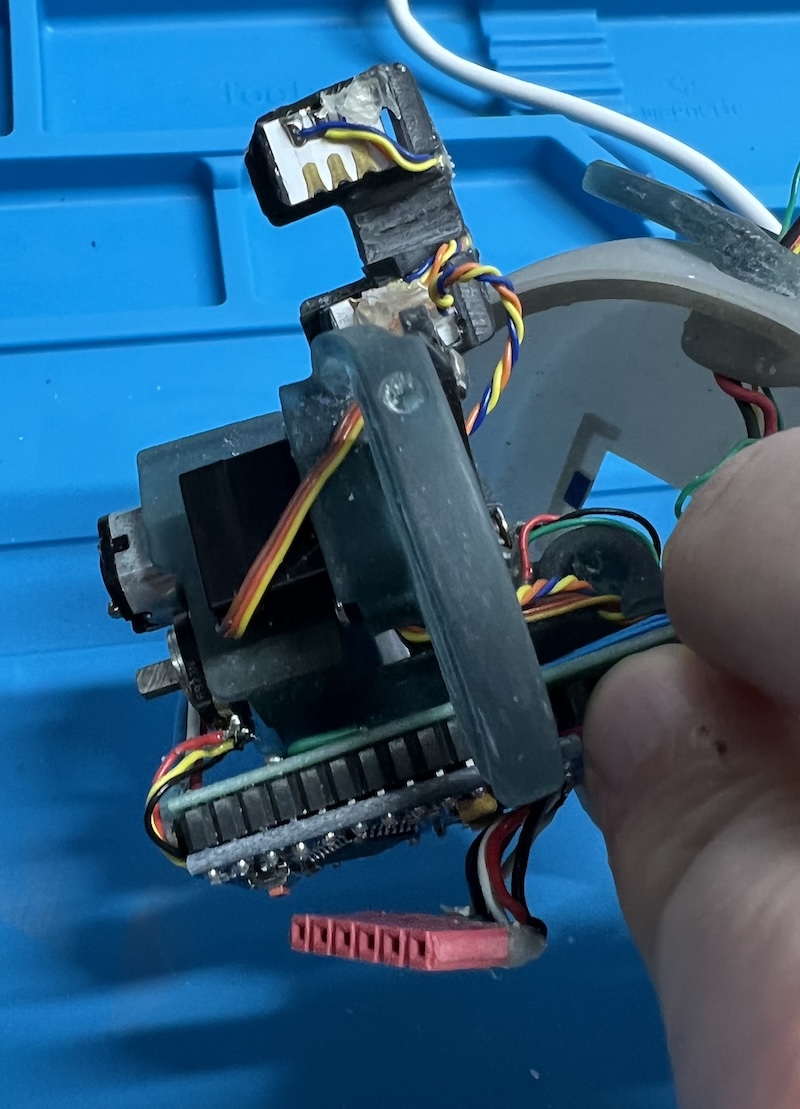

Unlike traditional devices such as Amazon Alexa or Google Home, Tama responds to gaze: it engages with users the moment they look at the device, a concept that predated Google's "Look and Talk" feature. Tama also rethinks what "always listening" means by building visual feedback into its design. It has a retractable spherical head with two full-color LED eyes, capable of 180 degrees of lateral movement and 60 degrees of vertical movement. The head sits on a tapered cylindrical body that houses two OMRON cameras, a seven-microphone array (ReSpeaker v2.0), twelve full-color LEDs, a loudspeaker, and a Raspberry Pi. Together, these components let Tama both capture and reciprocate the user's gaze, making speech interaction more intuitive and engaging through mutual gaze.

Tama's gaze interaction relies on its OMRON HVC-P2 cameras, which have a sensor resolution of 1600×1200 and can estimate gaze at 4 Hz from up to 3 meters away. Although the details of the eye-tracking features are proprietary, the cameras use hardware-accelerated machine learning models to deliver real-time face and gaze estimation. Their FPGA implementation makes them compact and power-efficient: they draw less than 0.4 A, require no personal calibration, and continuously provide face and gaze data via USB or serial communication. These properties allowed gaze-based interaction to be integrated throughout Tama's prototyping and testing phases. For more information on the technology behind our gaze detection cameras, visit Omron's technology page.
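To make the idea of gaze as a replacement for the wake word concrete, here is a minimal sketch of a dwell-based trigger fed by gaze estimates arriving at 4 Hz. It is not Tama's actual implementation and does not use the OMRON camera API: `GazeSample`, `GazeTrigger`, the angle tolerance, and the dwell and release times are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass

SAMPLE_RATE_HZ = 4  # gaze estimation rate given in the text


@dataclass
class GazeSample:
    """One hypothetical gaze estimate, in degrees relative to the camera axis."""
    face_detected: bool
    yaw_deg: float = 0.0
    pitch_deg: float = 0.0


def is_looking_at_device(sample: GazeSample, tolerance_deg: float = 10.0) -> bool:
    """Treat gaze within an assumed angular tolerance as 'looking at the device'."""
    return (
        sample.face_detected
        and abs(sample.yaw_deg) <= tolerance_deg
        and abs(sample.pitch_deg) <= tolerance_deg
    )


class GazeTrigger:
    """Start listening after a short dwell; stop after gaze has been away for a while.

    The dwell and release times are assumptions, not values from the Tama system.
    """

    def __init__(self, dwell_s: float = 0.5, release_s: float = 1.0,
                 tolerance_deg: float = 10.0) -> None:
        # Convert durations into a number of consecutive samples at 4 Hz.
        self.on_count = max(1, round(dwell_s * SAMPLE_RATE_HZ))
        self.off_count = max(1, round(release_s * SAMPLE_RATE_HZ))
        self.tolerance_deg = tolerance_deg
        self.listening = False
        self._hits = 0
        self._misses = 0

    def update(self, sample: GazeSample) -> bool:
        """Consume one gaze sample and return whether the agent should listen."""
        if is_looking_at_device(sample, self.tolerance_deg):
            self._hits += 1
            self._misses = 0
        else:
            self._misses += 1
            self._hits = 0

        if not self.listening and self._hits >= self.on_count:
            self.listening = True
        elif self.listening and self._misses >= self.off_count:
            self.listening = False
        return self.listening
```

With these assumed defaults, two consecutive on-target samples (half a second) start listening, and four consecutive off-target samples (one second) stop it. Using separate dwell and release thresholds keeps a brief glance away or a single dropped face detection from cutting the user off mid-sentence.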

Tama's software framework was created using the open-source conversational agent from Mycroft.ai. This was augmented with a browser-based Wizard-of-Oz (WoZ) dashboard, enabling researchers to manipulate the device's eye movements and color, as well as to craft and manage phrases spoken through Google's text-to-speech technology.

Tama's platform allows interaction through both voice commands and gaze-based inputs and outputs, with the wizard interface overseeing the responses.
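As an illustration of what a wizard might send from the dashboard, the following sketch models a single command that sets gaze direction, eye color, and a phrase to speak. The message format is hypothetical and not taken from the Tama codebase: `WizardCommand` and its field names are invented for this example, and the movement limits assume that the 180-degree lateral and 60-degree vertical ranges are centered on straight ahead.

```python
import json
from dataclasses import dataclass, asdict
from typing import Tuple

# Assumption: the stated movement ranges are symmetric around straight ahead.
PAN_RANGE_DEG = 180.0   # lateral movement
TILT_RANGE_DEG = 60.0   # vertical movement


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


@dataclass
class WizardCommand:
    """Hypothetical dashboard message: where to look, eye color, what to say."""
    pan_deg: float
    tilt_deg: float
    eye_rgb: Tuple[int, int, int]
    phrase: str = ""

    def normalized(self) -> "WizardCommand":
        """Return a copy with angles and colors clamped to valid ranges."""
        return WizardCommand(
            pan_deg=clamp(self.pan_deg, -PAN_RANGE_DEG / 2, PAN_RANGE_DEG / 2),
            tilt_deg=clamp(self.tilt_deg, -TILT_RANGE_DEG / 2, TILT_RANGE_DEG / 2),
            eye_rgb=tuple(int(clamp(c, 0, 255)) for c in self.eye_rgb),
            phrase=self.phrase.strip(),
        )

    def to_json(self) -> str:
        """Serialize the normalized command for transport to the device."""
        return json.dumps(asdict(self.normalized()), sort_keys=True)
```

Clamping on the sending side means an out-of-range value entered in the dashboard never reaches the motors or LEDs. The phrase is passed through as plain text, since speech synthesis is handled separately by the text-to-speech service.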

After a patent troll bankrupted Mycroft.ai, the Tama platform is being moved to the alternative Open Voice Operating System.